
Mistral turns focus toward regional LLMs with Saba release | InfoWorld

Technology insight for the enterprise

Mistral turns focus toward regional LLMs with Saba release 18 Feb 2025, 11:07 am

French AI startup Mistral is turning its focus toward providing large language models (LLMs) that understand regional languages and their parlance as a result of rising demand among its enterprise customers.

“Making AI ubiquitous requires addressing every culture and language. As AI proliferates globally, many of our customers worldwide have expressed a strong desire for models that are not just fluent but native to regional parlance,” the company wrote in a blog post.

Explaining further, it said that while larger LLMs are more general purpose and often proficient in several languages, they often fail to understand the usage of words in a certain language or lack understanding of the cultural background, which leads to failure of servicing use cases in local languages.

Some examples of these use cases could be conversational support, domain-specific expertise, and cultural content creation.

Mistral believes that LLMs that are custom-trained in regional languages can help service these use cases as the custom training would help an LLM “grasp the unique intricacies and insights for delivering precision and authenticity.”

Mistral’s first custom-trained regional language LLM

Mistral has released its first custom-trained regional language-focused model named Saba, which is a 24-billion parameter model. According to Mistral, the LLM has been trained on “meticulously curated datasets” from across the Middle East and South Asia.

This means that Saba can support use cases in Arabic and many Indian-origin languages, particularly South Indian-origin languages such as Tamil, the company said, adding that Saba’s support for multiple languages could increase its adoption.

Mistral claims that Saba is similar in size to its Mistral Small 3 model, which makes it cheaper to use than most LLMs.

Saba is lightweight and can be deployed on single-GPU systems, making it “more adaptable” for a variety of use cases, the company said, adding that the LLM can serve as a strong base to train highly specific regional adaptations.

The LLM’s deployment options include an API and local deployment on-premises. Mistral said the local deployment option could help more regulated industries, such as finance, banking, and healthcare, adopt the model.

In benchmark tests, such as Arabic MMLU, Arabic TyDiQAGoldP, Arabic Alghafa, and Arabic Hellaswag, Saba outperforms Mistral Small 3, Qwen 2.5 32B, Llama 3.1 70B, and G42’s Jais 70B.

Saba also outperforms Llama 3.3 70B Instruct, Cohere Command-r-08-2024 32B, Jais 70B Chat, and GPT-4o-mini in benchmarking tests, such as Arabic MMLU Instruct, Arabic MT-Bench Dev, and Arabic-Centric FLORES-101.

Why is Mistral turning its focus toward regional language LLMs?

Mistral’s focus on releasing regional language LLMs could help the company expand its overall revenue, analysts say.

“There’s a growing market for regional LLMs like Saba, especially for enterprises needing culturally and linguistically tailored solutions. The market could be significant, driven by demand for localized AI in sectors like finance, healthcare, and government, potentially reaching billions as businesses seek to enhance customer engagement and operational efficiency,” said Charlie Dai, principal analyst at Forrester.

“LLMs finetuned towards regional markets address specific linguistic, cultural, and regulatory needs, making AI solutions more relevant and effective for local enterprises. This differentiation can drive adoption and unlock revenue growth in underserved markets,” Dai explained.

In addition to regional language LLMs, Mistral said it has started training models for strategic customers who can provide deep and proprietary enterprise context.

“These (custom) models stay exclusive and private to the respective customers,” the company wrote in the blog post.

However, analysts warned that Mistral is not the only model provider trying to use the regional language model playbook for expansion.

BAAI from China open-sourced their Arabic Language Model (ALM) back in 2022. This was followed by DAMO of Alibaba Cloud open-sourcing its PolyLM in 2023 covering eleven languages including Arabic, Spanish, German, and others.

“We have been observing that language-specific LLMs have been growing in the Middle East. We saw some regional LLM launches by start-ups such as G42, which launched one of the first Arabic LLMs,” said Suseel Menon, practice director at Everest Group.

Alongside pointing out that regional public sector organizations in the Middle East have been attempting to create Arabic LLMs, such as the Saudi Data and AI Authority (SDAIA) that launched its LLM named ALLaM on IBM Cloud last year, Menon said that Saba’s presence is likely to drive more competition among model providers in the region.

Mistral also faces competition in South Asia, specifically in India, where several startups have used Llama 2 to create regional language models, such as OpenHathi-Hi-v0.1 for Hindi, Tamil Llama, Telugu Llama, odia_llama2_7B_v1, and VinaLLaMA for Vietnamese.

But Dai believes that the announcement of the models is just the first step. “Model providers who offer high-quality, localized solutions will only gain loyalty and market share in underserved areas,” Dai explained, adding that regional business operations around the models are another key to success.

3 reasons to consider a data security posture management platform 18 Feb 2025, 10:00 am

A week rarely goes by without a major data security breach. Recent news includes a breach impacting an energy company’s 8 million customers, another compromising the information on 450,000 current and former students, and one more exposing 240,000 credit union members. Fines for data security breaches can be steep; for example, the Irish Data Protection Commission recently fined Meta, Facebook’s parent company, $263.5 million for a 2018 breach impacting 29 million Facebook users.

Recent research highlights the challenges in data security, with 60% of organizations reporting that at least a fifth of their data stores contain personally identifiable information (PII) or other sensitive data. Protecting this data is complex for larger organizations, with 39% of sensitive data stored in data centers, 27% on public clouds, 18% in SaaS, and 14% in edge infrastructure, while 58% of organizations report over 20% annual growth in their data.

There are many best practices and solutions to help organizations address data security risks, and the 2024 Gartner hype cycle for data security identifies over 30 to consider. One of the newer entrants is data security posture management or DSPM, a term Gartner introduced in 2022 as a proactive approach to monitor and manage data security continuously.

What is data security posture management?

DSPM aims to bring several data security practices into one management framework. Tools often include data discovery capabilities that integrate with data across clouds and classification capabilities that categorize data based on sensitivity and compliance requirements. As data is classified, DSPM platforms aid in crafting access controls, performing risk assessments, monitoring sensitive data usage, and capturing data movements. For risk and security leaders, platforms provide visibility, controls, and policy enforcement for different regulatory requirements, such as GDPR, HIPAA, the California Consumer Privacy Act (CCPA), or the PCI data security standard (PCI-DSS).
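To make the classification step concrete, here is a minimal sketch that tags text records with sensitivity labels using a few regular expressions. The pattern set, the label names, and the `classify` function are all hypothetical and chosen for illustration; they are not taken from any DSPM product, and real platforms rely on much richer detection than simple pattern matching.

```python
import re

# Hypothetical detectors for illustration only. Production classifiers
# add validation (e.g. checksums), context, and many more data types.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def classify(text: str) -> set[str]:
    """Return the set of sensitive-data labels whose pattern occurs in text."""
    return {label for label, pattern in PATTERNS.items() if pattern.search(text)}


# Example records standing in for rows or files found during discovery.
records = [
    "Contact jane@example.com, SSN 123-45-6789",
    "card 4111 1111 1111 1111",
    "order shipped",
]
for record in records:
    print(sorted(classify(record)), "<-", record)
```

Once each record carries labels like these, the access controls, risk assessments, and monitoring described above can be keyed to the labels rather than to individual data stores.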

“Data environments are only getting more complex, and regulations aren’t getting any easier to comply with,” says Amer Deeba, GVP of Proofpoint DSPM Group. “Real-time knowledge of what data you have, where it is, and how it’s being accessed is no longer optional—it’s required to report data breaches from the outset accurately. DSPM is the map that pinpoints the location of all the data that regulations care about, then overlays it with applicable rules so you can see exactly where things are out of line—whether it’s how the data is stored, accessed, or handled.”

DSPM solutions are already a big market, estimated at $94 billion in 2023 and projected to grow to $174 billion by 2031. These solutions aim to be horizontal data security platforms that discover, assess, and manage sensitive data wherever it’s stored, moved, or accessed.

Top DSPM solutions include Concentric AI, Cyera, Microsoft Purview, Securiti, Sentra, Spirion, Symmetry Systems, Theom, Varonis, and Wiz. DSPM is also a hot space for mergers and acquisitions, with deals such as CrowdStrike buying Flow Security, Formstack buying Open Raven, IBM buying Polar Security, Proofpoint buying Normalyze, Palo Alto Networks buying Dig Security, Rubrik buying Laminar, and Tenable acquiring Eureka Security.

What’s driving IT, security, and data leaders’ rising interest in DSPM platforms? Here are three big factors.

DSPM extends data compliance to dark data

“DSPM is an independent security layer, agnostic to infrastructure, that protects sensitive data and ensures consistent controls no matter where data travels,” says Yoav Regev, co-founder and CEO of Sentra. “It assesses exposure risks, identifies who has access to company data, classifies how data is used, ensures compliance with regulatory requirements like GDPR, PCI-DSS, and HIPAA, and continuously monitors data for emerging threats.”

Virtually all businesses must consider data compliance as part of their proactive data governance initiatives, which focus on business benefits and risks when establishing data-driven organizations. Data discovery used to be tedious, requiring organizations to use multiple tools to scan different data sources. Newer innovations such as machine learning prediction models, integration to multiple clouds and SaaS, and automation baked into DSPM platforms greatly reduce the complexity and improve the ability to find complex patterns and other data anomalies.

“DSPM uses machine learning and other technologies to discover, classify, and monitor an organization’s most sensitive data, then details where it lives, who has access, and how it’s used,” says Akiba Saeedi, VP of product management at IBM Security. “These insights enable organizations to shield exposed data, revoke unauthorized access, secure vulnerabilities, and remain compliant. The upshot is mitigating disastrous data breaches, costly non-compliance fines, and data leakage by LLMs.”

One of the issues facing organizations is dark data, which is data that organizations store but do not analyze for intelligence, use in decision-making, or scan for security and compliance risks. DSPM platforms can find this data, identify data security risks, and enable remediations.

“With DSPM, teams can set up smarter data loss prevention rules, keep insider threats in check, or clean up shadow data that shouldn’t exist in the first place. It’s about turning blind spots into a clear view of your data landscape,” adds Amer Deeba of Proofpoint DSPM Group.

DSPM safeguards data in complex and hybrid infrastructures

Point solutions that address one aspect of data security or optimize for one type of infrastructure are no longer adequate to meet the complexity of systems that store, process, and access data across multiple clouds and platforms. Furthermore, regulations require organizations to consider SaaS, which often stores sensitive information types beyond just customer data. Locking down data in selected platforms can be inefficient and complicates proving to regulators that all sensitive data meets policies regardless of where it’s stored and utilized.

“DSPM is a comprehensive approach to safeguarding sensitive data across hybrid multi-cloud, SaaS, and on-premises environments,” says Nikhil Girdhar, senior director for data security at Securiti. “DSPM involves discovering all your data assets, including shadow data, classifying sensitive information, remediating risks like misconfigurations, and enforcing access controls to prevent unauthorized access. DSPM helps organizations ensure compliance with data protection laws and maintain a strong security posture by continuously monitoring and assessing data security risks.”

A platform approach to data security also ensures that data is scanned and classified consistently, even when there are multiple platforms and different types of sensitive data.

“DSPM discovers where data is residing, particularly across organizations’ many cloud apps and systems, and analyzes whether it contains sensitive customer or employee information like health records, credit card numbers, ID numbers, or if files are secret internal documents,” says Jim Fulton, VP of product marketing at Forcepoint. “This helps security leaders to proactively manage their data security policies within diverse cloud and on-premises environments, streamline compliance efforts, and ultimately foster innovation in a data-driven world.”

DSPM protects data exposed to AI models

Data needs protection whether it is being stored in databases, data lakes, and file systems; in transit through data pipelines and APIs; or being incorporated and used in AI models.

“The rise of AI is fragmenting data and expanding organizational attack surfaces faster than ever, so companies must now monitor not just systems, web assets, and APIs, but also AI models and the systems those models power,” says Rob Gurzeev, CEO and co-founder of CyCognito. “By leveraging advanced monitoring and contextual analysis, organizations can uncover where vulnerabilities intersect, such as compromised credentials tied to assets with known critical exploits. This reduces false positives and dramatically improves mean time to remediation, enabling faster and more precise incident response.”

Data security platforms once focused on structured data in SQL databases and file systems, while document management solutions provided security on documents and unstructured data. Organizations looking for a holistic approach to data security rely on DSPMs to handle both structured and unstructured data sources, while some platforms, such as Concentric, extend to video and other multimedia formats.

“Having control over your data—knowing where it is, what’s in it, who has access to it, and how it’s protected—has always been important. And now, in this new age of AI, control and visibility can no longer be ignored,” says Amit Shaked, GM & VP of DSPM strategy, growth and monetization at Rubrik. “AI can make data available instantly to anyone with the right access, which is why right-sizing permissions is critical—not only for employees who shouldn’t be able to access sensitive files but also in case of a compromised identity.”

As more organizations seek faster and more scalable business value from AI, they can’t let data security become a lagging risk-management practice. DSPM platforms provide a centralized and consistent approach to discovering, classifying, and managing sensitive information.

Key strategies for MLops success in 2025 18 Feb 2025, 10:00 am

Integrating and managing artificial intelligence and machine learning effectively within business operations has become a top priority for businesses looking to stay competitive in an ever-evolving landscape. However, for many organizations, harnessing the power of AI/ML in a meaningful way is still an unfulfilled dream. Hence, I thought it would be helpful to survey some of the latest MLops trends and offer some actionable takeaways for conquering common ML engineering challenges.

As you might expect, generative AI models differ significantly from traditional machine learning models in their development, deployment, and operations requirements. I’ll walk through these differences, which range from training and the delivery pipeline to monitoring, scaling, and measuring model success, and leave you with a few key questions organizations should address to guide their AI/ML strategy.

Ultimately, by focusing on solutions, not just models, and by aligning MLops with IT and devops systems, organizations can unlock the full potential of their AI initiatives and drive measurable business impacts.

The foundations of MLops

Like many things in life, in order to successfully integrate and manage AI and ML into business operations, organizations first need to have a clear understanding of the foundations. The first fundamental of MLops today is understanding the differences between generative AI models and traditional ML models.

Generative AI models differ significantly from traditional ML models in terms of data requirements, pipeline complexity, and cost. GenAI models can handle unstructured data like text and images, often requiring really complicated pipelines to process prompts, manage conversation history, and integrate private data sources. In contrast, traditional models focus on specific data and are generally optimized for specific challenges, making them simpler and more cost-effective.

Cost is another major differentiator. The calculations of generative AI models are more complex, resulting in higher latency, demand for more computing power, and higher operational expenses. Traditional models, on the other hand, often utilize pre-trained architectures or lightweight training processes, making them more affordable for many organizations. When determining whether to utilize a generative AI model versus a standard model, organizations must evaluate these criteria and how they apply to their individual use cases.

Model optimization and monitoring techniques

Optimizing models for specific use cases is crucial. For traditional ML, fine-tuning pre-trained models or training from scratch are common strategies. GenAI introduces additional options, such as retrieval-augmented generation (RAG), which allows the use of private data to provide context and ultimately improve model outputs. Choosing between general-purpose and task-specific models also plays a critical role. Do you really need a general-purpose model or can you use a smaller model that is trained for your specific use case? General-purpose models are versatile but often less efficient than smaller, specialized models built for specific tasks.

Model monitoring also requires distinctly different approaches for generative AI and traditional models. Traditional models rely on well-defined metrics like accuracy, precision, and F1 score, which are straightforward to evaluate. In contrast, generative AI models often involve metrics that are a bit more subjective, such as user engagement or relevance. Good metrics for genAI models are still lacking and it really comes down to the individual use case. Assessing a model is very complicated and can sometimes require additional support from business metrics to understand if the model is acting according to plan. In any scenario, businesses must design architectures that can be measured to make sure they deliver the desired output.
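For readers who want to see why the traditional metrics are straightforward to evaluate, here is a minimal sketch that computes accuracy, precision, recall, and F1 for a binary classifier from its confusion-matrix counts. The `binary_metrics` function and the sample labels are illustrative assumptions; in practice a library such as Scikit-learn provides equivalent functions.

```python
def binary_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up ground truth and predictions for eight examples.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(binary_metrics(y_true, y_pred))
```

Each of these numbers follows mechanically from labeled examples. A generative model's "relevance" has no such ground-truth column to compare against, which is the gap the paragraph above describes.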

Advancements in ML engineering

Traditional machine learning has long relied on open source solutions, from open source architectures like LSTM (long short-term memory) and YOLO (you only look once), to open source libraries like XGBoost and Scikit-learn. These solutions have become the standards for most challenges thanks to being accessible and versatile. For genAI, however, commercial solutions like OpenAI’s GPT models and Google’s Gemini currently dominate, because building these models from scratch involves massive data requirements, intricate training, and significant costs.

Despite the popularity of commercial generative AI models, open-source alternatives are gaining traction. Models like Llama and Stable Diffusion are closing the performance gap, offering cost-effective solutions for organizations willing to fine-tune or train them using their specific data. However, open-source models can present licensing restrictions and integration challenges that complicate ongoing compliance and efficiency.

Efficient scaling of ML systems

As more and more companies decide to invest in AI, there are best practices for data management and classification and architectural approaches that should be considered for scaling ML systems and ensuring high performance.

Leveraging internal data with RAG

Important questions revolve around data: What is my internal data? How can I use it? Can I train based on this data with the correct structure? One powerful strategy for scaling ML systems with genAI is retrieval-augmented generation. RAG uses internal data to supply context to a general-purpose model. By embedding and querying internal data, organizations can provide context-specific answers and improve the relevance of genAI outputs. For instance, uploading product documentation to a vector database allows a model to deliver precise, context-aware responses to user queries.
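The embed-and-query flow can be sketched in a few lines. This is a toy under stated assumptions: `embed` uses word counts as a stand-in for a learned embedding model, `VectorStore` is a hypothetical in-memory list rather than a real vector database, and `build_prompt` only assembles the text that would be sent to a model; no model is called.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class VectorStore:
    """Hypothetical in-memory store; a real system would use a vector database."""

    def __init__(self):
        self.items = []  # list of (document, embedding) pairs

    def add(self, doc):
        self.items.append((doc, embed(doc)))

    def query(self, question, k=1):
        """Return the k documents most similar to the question."""
        q = embed(question)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]),
                        reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(question, store, k=1):
    """Prepend retrieved internal context to the user's question."""
    context = "\n".join(store.query(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Made-up product documentation standing in for internal data.
store = VectorStore()
store.add("The Model X router supports WPA3 encryption.")
store.add("Refunds are processed within 14 days of a return.")
store.add("The Model X router ships with a two-year warranty.")

print(build_prompt("How long do refunds take?", store))
```

The general-purpose model itself is unchanged. Only the prompt differs, which is why RAG is usually cheaper to adopt than fine-tuning.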

Key architectural considerations

Creating scalable and efficient MLops architectures requires careful attention to components like embeddings, prompts, and vector stores. Fine-tuning models for specific languages, geographies, or use cases ensures tailored performance. An MLops architecture that supports fine-tuning is more complicated, so organizations should prioritize A/B testing across various building blocks to optimize outcomes and refine their solutions.

Metrics for model success

Aligning model outcomes with business objectives is essential. Metrics like customer satisfaction and click-through rates can measure real-world impact, helping organizations understand whether their models are delivering meaningful results. Human feedback is essential for evaluating generative models and remains the best practice. Human-in-the-loop systems help fine-tune metrics, check performance, and ensure models meet business goals.

In some cases, advanced generative AI tools can assist or replace human reviewers, making the process faster and more efficient. By closing the feedback loop and connecting predictions to user actions, there is opportunity for continuous improvement and more reliable performance.

Focus on solutions, not just models

The success of MLops hinges on building holistic solutions rather than isolated models. Solution architectures should combine a variety of ML approaches, including rule-based systems, embeddings, traditional models, and generative AI, to create robust and adaptable frameworks.

Organizations should ask themselves a few key questions to guide their AI/ML strategies:

- Do we need a general-purpose solution or a specialized model?

- How will we measure success and which metrics align with our goals?

- What are the trade-offs between commercial and open-source solutions, and how do licensing and integration affect our choices?

Here is the key: You are not just building models anymore, you are building solutions. You are building architectures that include many moving parts and each one of the building blocks has the power to change the experience and the metrics that you get from a solution. As MLops continues to evolve, organizations must adapt by focusing on scalable, metrics-driven architectures. By leveraging the right combination of tools and strategies, businesses can unlock the full potential of AI and machine learning to drive innovation and deliver measurable business results.

Yuval Fernbach is the co-founder and CTO of Qwak and currently serves as VP and CTO of MLops at JFrog following its acquisition of Qwak. In his role, he pioneers a fully managed, user-friendly machine learning platform, enabling creators to reshape data, construct, train, and deploy models, and oversee the complete machine learning life cycle.

—

Generative AI Insights provides a venue for technology leaders—including vendors and other outside contributors—to explore and discuss the challenges and opportunities of generative artificial intelligence. The selection is wide-ranging, from technology deep dives to case studies to expert opinion, but also subjective, based on our judgment of which topics and treatments will best serve InfoWorld’s technically sophisticated audience. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Contact doug_dineley@foundryco.com.

Serverless was never a cure-all 18 Feb 2025, 10:00 am

An obituary for serverless computing might read something like this:

In loving memory of serverless computing, born from the fervent hopes of developers and cloud architects alike. Its life began with the promise of effortless scalability and reduced operational burdens, captivating many with the allure of “deploy and forget.” For a time, serverless thrived and was praised for its ability to manage fluctuating traffic with grace and dispatch.

However, as the years went by, the excitement faded. Serverless encountered the harsh realities of complexity and unforeseen costs. Loved ones learned that serverless alleviated some burdens but introduced others—debugging was the stuff of nightmares, and limitations suffocated creativity. Many despaired at its inability to fit every application’s needs, leading to anguished searches for reliable alternatives.

Ultimately, serverless computing succumbed to the harsh truth that it was not a universal solution but a specialized tool for niche scenarios. As we gather to reflect on its successes and failures, we must remember the lesson it taught us: Sometimes the most exciting new products can cloud our judgment. Serverless computing leaves behind a mixed legacy to remind us that no single approach reigns supreme in technology; the right tool always depends upon the problem it must solve.

Rest in peace, dear serverless. Your lessons will endure.

Let’s reflect for a moment on those who have tried serverless and emerged a bit wiser from the experience. Developers and organizations now understand the necessity of a hybrid approach, blending serverless and traditional architectures to address their diverse application needs. Yes, serverless benefits specific scenarios, such as bursty traffic and asynchronous components, but it is not a universal remedy.

What killed serverless?

Serverless architectures were originally promoted as a way for developers to rapidly deploy applications without the hassle of server management. The allure was compelling: no more server patching, automatic scalability, and the ability to focus solely on business logic while lowering costs. This promise resonated with many organizations eager to accelerate their digital transformation efforts.

Yet many organizations adopted serverless solutions without fully understanding the implications or trade-offs. It became evident that while server management may have been alleviated, developers faced numerous complexities. From database management to security vulnerabilities, the challenges of application development persisted, pushing enterprises to reconsider their cloud-based development strategies.

So, what are the realities of serverless adoption? Here are a few:

Serverless apps come with strict operational constraints. Cold start issues, time limits on function execution, and the necessity of using approved programming languages are some of the problems. Moreover, developers must learn how to handle asynchronous programming models, which complicate debugging and increase the learning curve associated with serverless.

Expenses skyrocketed for many enterprises using serverless. The pay-as-you-go model appears attractive for intermittent workloads, but it can quickly spiral out of control if an application operates under unpredictable traffic patterns or contains many small components. The requirement for scalability, while beneficial, also necessitates careful budget management—this is a challenge if teams are unprepared to closely monitor usage.

Debugging in a serverless environment poses significant hurdles. Locating the root cause of issues across multiple asynchronous components becomes more challenging than in traditional, monolithic architectures. Developers often spent the time they saved from server management struggling to troubleshoot these complex interactions, undermining the operational efficiencies serverless was meant to provide.
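The cost point above comes down to simple arithmetic: a pay-per-request bill grows linearly with traffic, while a provisioned server costs roughly the same every month. The sketch below finds the break-even request volume. Both functions and all prices are hypothetical placeholders, not any provider's actual rates, and the model ignores per-duration and data-transfer charges.

```python
def serverless_cost(requests, price_per_million_requests):
    """Monthly pay-as-you-go cost for a given number of requests."""
    return requests / 1_000_000 * price_per_million_requests


def break_even_requests(fixed_monthly_cost, price_per_million_requests):
    """Request volume at which pay-as-you-go matches a fixed monthly cost."""
    return fixed_monthly_cost / price_per_million_requests * 1_000_000


# Hypothetical numbers: a $100/month server vs. $0.50 per million requests.
fixed = 100.0
price = 0.50
threshold = break_even_requests(fixed, price)
print(f"Break-even at {threshold:,.0f} requests per month")

for monthly_requests in (50_000_000, 400_000_000):
    cost = serverless_cost(monthly_requests, price)
    cheaper = "serverless" if cost < fixed else "fixed server"
    print(f"{monthly_requests:,} requests: ${cost:,.2f} -> {cheaper} is cheaper")
```

Below the threshold the pay-as-you-go model wins; above it, or when traffic is unpredictable enough to cross it without warning, the bill can exceed what a fixed deployment would have cost.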

Smart strategies for cloud development

Serverless may still have a place in enterprise cloud strategy, but it should be integrated into the broader toolkit of application development methodologies.

Serverless computing may remain helpful in specific scenarios. Applications with sporadic traffic and isolated functions that can be independently tested may still be good candidates for serverless. However, traditional methods may offer better reliability and cost-effectiveness for applications with consistent loads or more predictable patterns.

Enterprises looking for predictability should opt for traditional architectures. This allows more intimate management of the environment and costs. Monolithic and containerized solutions may provide a more straightforward path to better control expenses and simplify troubleshooting.

A hybrid cloud strategy can enhance responsiveness and innovation. Organizations can mix serverless, containerized, and traditional architectures, tailoring their approach to the specific requirements of various applications. This can safeguard against reliance on any single paradigm.

Developer training is essential in a mixed methodology. Teams need to be skilled in both traditional and serverless paradigms to successfully navigate the complexities of modern application development.

Nice try, cloud providers

Today, serverless has proven to be a risky and often costly investment that does not suit the needs of most businesses. While it can effectively address specific scenarios, such as asynchronous applications with unpredictable traffic, most enterprises find that traditional architectures offer greater predictability and control. The myth that serverless eliminates all burdens has been dispelled as teams are left to manage complexities similar to conventional setups.

In the current cloud climate, it’s best to focus on a hybrid approach. Leverage serverless computing where it makes sense, but rely on more traditional methods to harness the strengths of both strategies. It’s time to admit that serverless didn’t live up to its hype and make choices that align with specific business needs. Sorry, cloud providers, but it’s time to leave this one behind.

Large language models: The foundations of generative AI 17 Feb 2025, 11:46 am

Large language models (LLMs) such as GPT, Bard, and Llama have caught the public’s imagination and garnered a wide variety of reactions. They are also expected to grow dramatically in the coming years. According to Dimension Market Research, the global LLM market is expected to reach $140.8 billion by 2033 at a CAGR of 40.7%.

This article looks behind the hype to help you understand the origins of large language models, how they’re built and trained, and the range of tasks they are specialized for. We’ll also look at the most popular LLMs in use today.

What is a large language model?

Language models go back to the early 20th century, but large language models (LLMs) emerged with a vengeance after neural networks were introduced. The Transformer deep neural network architecture, introduced in 2017, was particularly instrumental in the evolution from language models to LLMs.

Large language models are useful for a variety of tasks, including text generation from a descriptive prompt, code generation and code completion, text summarization, translating between languages, and text-to-speech and speech-to-text applications.

LLMs also have drawbacks, at least in their current developmental stage. Generated text is usually mediocre, and sometimes downright bad. LLMs are known to invent facts, called hallucinations, which might seem reasonable if you don’t know better. Language translations are rarely 100% accurate unless they’ve been vetted by a native speaker, which is usually only done for common phrases. Generated code often has bugs, and sometimes has no hope of running. While LLMs are usually fine-tuned to avoid making controversial statements or recommending illegal acts, it is possible to breach these guardrails using malicious prompts.

Training large language models requires at least one large corpus of text. Examples of training corpora include the 1B Word Benchmark, Wikipedia, the Toronto Books Corpus, the Common Crawl dataset, and public open source GitHub repositories. Two potential problems with large text datasets are copyright infringement and garbage. Copyright infringement is currently the subject of multiple lawsuits. Garbage, at least, can be cleaned up; an example of a cleaned dataset is the Colossal Clean Crawled Corpus (C4), an 800GB dataset based on the Common Crawl dataset.

The role of parameters in LLMs

Large language models differ from traditional language models in that they use a deep learning neural network and a large training corpus, and they require millions or more parameters, or weights, for the neural network.

Along with at least one large training corpus, LLMs require large numbers of parameters, also known as weights. The number of parameters grew over the years, until it didn’t. ELMo (2018) has 93.6 million parameters; BERT (2018) was released in 100-million and 340-million parameter sizes; GPT (2018) uses 117 million parameters; and T5 (2020) has 220 million parameters. GPT-2 (2019) has 1.5 billion parameters; GPT-3 (2020) uses 175 billion parameters; and PaLM (2022) has 540 billion parameters. GPT-4 (2023) is reported to have 1.76 trillion parameters, although OpenAI has not confirmed the figure.

In simpler terms: Imagine an LLM as a vast network of interconnected switches. Each switch has a setting (the parameter) that determines how it responds to input. During training, these switches are adjusted to optimize the network’s overall performance in understanding and generating language.

More parameters generally make a model more accurate, but models with more parameters also require more memory and run more slowly. In 2023, we started to see some relatively smaller models released at multiple sizes: for example, Llama 2 comes in sizes of 7 billion, 13 billion, and 70 billion, while Claude 2 has 93-billion and 137-billion parameter sizes.
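To make the memory cost concrete, here is a rough back-of-the-envelope sketch. It assumes the weights are stored as 16-bit numbers (2 bytes each) and counts only the weights themselves, ignoring activations and other runtime overhead. The function name is illustrative.

```python
def weight_memory_gb(num_parameters: int, bytes_per_parameter: int = 2) -> float:
    """Approximate memory needed just to hold the weights, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# Llama 2 sizes quoted above; 2 bytes per parameter is an assumption (16-bit weights).
for name, params in [("7B", 7_000_000_000), ("13B", 13_000_000_000), ("70B", 70_000_000_000)]:
    print(f"Llama 2 {name}: ~{weight_memory_gb(params):.0f} GB of weights")
```

Halving the bytes per parameter (for example, with 8-bit weights) halves the estimate, which is one reason smaller or more compactly stored models are easier to run.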

However, it’s not just about the number of parameters. Other factors also play a crucial role:

- The quality of the training data: Even a model with many parameters will perform poorly if it’s trained on biased or low-quality data.

- The architecture of the model: The way the parameters are organized and connected also affects the model’s capabilities.

- The training process itself: Effective training techniques are essential for optimizing the parameters.

Parameters are essential to LLMs: they are the learned knowledge that allows the models to understand and generate human-like text. The number of parameters is important, but it’s just one of the factors that contribute to an LLM’s overall performance.

A history of AI models for text generation

Language models go back to Andrey Markov, who applied mathematics to poetry in 1913. Markov showed that in Pushkin’s Eugene Onegin, the probability of a character appearing depended on the previous character, and that, in general, consonants and vowels tended to alternate. Today, Markov chains are used to describe a sequence of events in which the probability of each event depends on the state of the previous one.

Markov’s work was extended by Claude Shannon in 1948 for communications theory, and again by Fred Jelinek and Robert Mercer of IBM in 1985 to produce a language model based on cross-validation (which they called deleted estimates), and applied to real-time large-vocabulary speech recognition. Essentially, a statistical language model assigns probabilities to sequences of words.
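As a minimal sketch of the idea, the following builds a word-level bigram model, which is a first-order Markov chain: the probability of each word depends only on the word before it. The tiny corpus and the function names are made up for illustration, and there is no smoothing or cross-validation here.

```python
from collections import Counter, defaultdict


def train_bigram_model(words):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for previous, current in zip(words, words[1:]):
        counts[previous][current] += 1
    return counts


def next_word_probabilities(counts, previous):
    """Turn the raw counts for one context word into probabilities."""
    total = sum(counts[previous].values())
    return {word: n / total for word, n in counts[previous].items()}


corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "the"))
```

In this corpus, "the" is followed by "cat" twice and "mat" once, so the model assigns "cat" twice the probability of "mat" as the next word.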

To quickly see a language model in action, just type a few words into Google Search, or a text message app on your phone, with auto-completion turned on.

In 2000, Yoshua Bengio and co-authors published a paper detailing a neural probabilistic language model in which neural networks replace the probabilities in a statistical language model, bypassing the curse of dimensionality and improving word predictions over a smoothed trigram model (then the state of the art) by 20% to 35%. The idea of feed-forward auto-regressive neural network models of language is still used today, although the models now have billions of parameters and are trained on extensive corpora; hence the term “large language model.”

Language models have continued to get bigger over time, with the goal of improving performance. But such growth has downsides. The 2021 paper, On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?, questions whether we are going too far with the larger-is-better trend. The authors suggest weighing the environmental and financial costs first and investing resources into curating and documenting datasets rather than ingesting everything on the web.

Language models and LLMs explained

Current language models have a variety of tasks and goals and take various forms. For example, in addition to the task of predicting the next word in a document, language models can generate original text, classify text, answer questions, analyze sentiment, recognize named entities, recognize speech, recognize text in images, and recognize handwriting. Customizing language models for specific tasks, typically using small to medium-sized supplemental training sets, is called fine-tuning.

Some of the intermediate tasks that go into language models are the following:

- Segmentation (of the training corpus into sentences): LLMs are trained on vast amounts of text, which needs to be broken down into individual sentences for the model to learn the structure and relationships between words within sentences. Segmentation is the process of identifying sentence boundaries (e.g., using punctuation).

- Word tokenization: Tokenization breaks down the text into individual units (tokens), which can be words, sub-word units (like parts of words), or punctuation marks. This is a crucial first step before feeding text to an LLM.

- Stemming: Reduces words to their root form (e.g., “running” to “run”). While historically important in NLP, stemming is less crucial for modern LLMs because they often handle morphological variations implicitly through their training. LLMs are often trained on raw text or use sophisticated tokenization that handles these variations.

- Lemmatizing (conversion to the root word): Similar to stemming, but more sophisticated. Lemmatization uses dictionaries and grammatical rules to find the base or dictionary form of a word (e.g., “running” to “run”). Like stemming, it’s less critical for LLMs as they are good at dealing with different forms of the same word.

- (Part of speech) tagging: Identifying the grammatical role of each word in a sentence (e.g., noun, verb, adjective). While LLMs can often infer POS tags implicitly, having explicit POS tags as input can sometimes be useful for specific tasks or fine-tuning.

- Stopword identification and (possibly) removal: Stopwords are common words (e.g., “the,” “a,” “is”) that are often removed in traditional NLP tasks to reduce noise. For LLMs, removing stopwords is often not beneficial, as these words contribute to the meaning and structure of sentences. LLMs generally benefit from having the full context.

- Named-entity recognition (NER): Identifying and classifying named entities in text (e.g., people, organizations, locations). LLMs are very good at NER and can be used to extract this information from text. This can be a task LLMs are fine-tuned for, or even performed through prompt engineering.

- Text classification: Assigning categories or labels to text documents. LLMs can be used for text classification tasks. You can fine-tune an LLM to classify text, or use prompt engineering to guide the model towards the desired categories.

- Chunking (breaking sentences into meaningful phrases): Grouping words into phrases (e.g., noun phrases, verb phrases). While LLMs may not explicitly use chunking as a preprocessing step, they implicitly understand phrase structure and can be prompted to extract phrases.

- Coreference resolution (finding all expressions that refer to the same entity in a text): Identifying all mentions of the same entity in a text, even if they are referred to using different words or pronouns (e.g., “John,” “he,” “the CEO”). LLMs are capable of performing coreference resolution, and this is a task that can be used to improve the LLM’s understanding of relationships between entities in a text.

Several of these are also useful as tasks or applications in and of themselves, such as text classification.
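A few of the simpler tasks in the list above (segmentation, word tokenization, and stopword removal) can be sketched with nothing but regular expressions. This is a deliberately naive illustration: the rules, the stopword list, and the function names are assumptions, and production tokenizers handle abbreviations, sub-word units, and many other cases that this sketch ignores.

```python
import re

STOPWORDS = {"the", "a", "is", "on", "and"}  # tiny illustrative list, not a standard one


def split_sentences(text):
    """Naive segmentation: split after ., ! or ? when followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(sentence):
    """Naive word tokenization: words and punctuation marks become separate tokens."""
    return re.findall(r"\w+|[^\w\s]", sentence)


def remove_stopwords(tokens):
    """Drop tokens that appear in the stopword list, ignoring case."""
    return [t for t in tokens if t.lower() not in STOPWORDS]


text = "The cat sat on the mat. Is the dog asleep?"
for sentence in split_sentences(text):
    tokens = tokenize(sentence)
    print(tokens, "->", remove_stopwords(tokens))
```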

Large language models are different from traditional language models in that they use a deep learning neural network and a large training corpus, and they require millions or more parameters or weights for the neural network. Training an LLM is a matter of optimizing the weights so that the model has the lowest possible error rate for its designated task. An example task would be predicting the next word at any point in the corpus, typically in a self-supervised fashion.
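To illustrate what “lowest possible error” can mean for next-word prediction, here is a small sketch that uses cross-entropy, a common choice of loss for this task (the article does not name a specific loss, so treat that as an assumption). The vocabulary and the model scores are invented for the example.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def next_word_loss(scores, correct_index):
    """Cross-entropy loss: negative log-probability assigned to the correct next word."""
    return -math.log(softmax(scores)[correct_index])


vocabulary = ["mat", "dog", "moon"]
# Hypothetical model scores for the word that follows "the cat sat on the".
confident_scores = [4.0, 1.0, 0.5]
unsure_scores = [1.0, 1.0, 1.0]
print(next_word_loss(confident_scores, vocabulary.index("mat")))
print(next_word_loss(unsure_scores, vocabulary.index("mat")))
```

The loss is lower when the model puts more probability on the word that actually appears in the corpus. Training adjusts the weights to push this number down across the whole corpus.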

A look at the most popular LLMs

The recent explosion of large language models was triggered by the 2017 paper, Attention is All You Need, which introduced the Transformer as, “a new simple network architecture … based solely on attention mechanisms, dispensing with recurrence and convolutions entirely.”
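For readers who want to see what an attention mechanism computes, here is a minimal pure-Python sketch of scaled dot-product attention, the core operation in that paper. It is a single head with no learned projection matrices, masking, or batching, and the function and variable names are illustrative.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


print(attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [4.0, 0.0]]))
```

Each output vector is a blend of the value vectors, weighted by how closely the query matches each key.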

Here are some of the top large language models in use today.

ELMo

ELMo is a 2018 deep contextualized word representation LLM from AllenNLP that models both complex characteristics of word use and how that use varies across linguistic contexts. The original model has 93.6 million parameters and was trained on the 1B Word Benchmark.

BERT

BERT is a 2018 language model from Google AI based on the company’s Transformer neural network architecture. BERT was designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers. The two model sizes initially used were 100 million and 340 million total parameters. The LLM uses masked language modeling (MLM), in which ~15% of tokens are “corrupted” for training. It was trained on English Wikipedia plus the Toronto Books Corpus.
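The masking step can be sketched as follows. This is a simplified illustration, not BERT’s actual preprocessing: it always substitutes a `[MASK]` token, whereas BERT sometimes swaps in a random token or leaves the chosen token unchanged. The function name, seed, and sample sentence are assumptions.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Replace roughly mask_rate of the tokens with [MASK]; return inputs and labels."""
    if not tokens:
        return [], []
    rng = random.Random(seed)
    num_to_mask = max(1, round(len(tokens) * mask_rate))
    positions = set(rng.sample(range(len(tokens)), num_to_mask))
    masked = [MASK_TOKEN if i in positions else tok for i, tok in enumerate(tokens)]
    # Labels hold the original token at masked positions and None everywhere else.
    labels = [tok if i in positions else None for i, tok in enumerate(tokens)]
    return masked, labels


masked, labels = mask_tokens("the quick brown fox jumps over the lazy dog today".split())
print(masked)
print(labels)
```

During training, the model sees the masked sequence and is scored only on how well it recovers the original tokens at the masked positions, using context from both sides.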

Gemini

Google’s Gemini, based on its Bard technology, offers multimodal capabilities and a focus on efficiency (with versions like Nano for on-device processing), and is likely to be a leader in 2025. We can anticipate further enhancements in its ability to understand and generate diverse content, including code and images.

T5

The 2020 Text-To-Text Transfer Transformer (T5) model from Google synthesizes a new model based on the best transfer learning techniques from GPT, ULMFiT, ELMo, BERT, and their successors. It uses the open source Colossal Clean Crawled Corpus (C4) as a pre-training dataset. The standard C4 for English is an 800GB dataset based on the original Common Crawl dataset. T5 reframes all NLP tasks into a unified text-to-text format where the input and output are always text strings, in contrast to BERT-style models that can only output either a class label or a span of the input. The base T5 model has about 220 million total parameters.
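The text-to-text framing is easiest to see with examples. The sketch below renders different tasks as plain input strings by adding a task prefix, so one model can handle all of them by generating text. The helper function and the `sentiment:` prefix are illustrative assumptions rather than T5’s exact task names.

```python
def to_text_to_text(task, **fields):
    """Render a task as a single input string by adding a task prefix."""
    if task == "translate":
        return f"translate {fields['source']} to {fields['target']}: {fields['text']}"
    if task == "summarize":
        return f"summarize: {fields['text']}"
    if task == "sentiment":
        # Classification becomes generation: the model emits the label as text.
        return f"sentiment: {fields['text']}"
    raise ValueError(f"unknown task: {task}")


examples = [
    (to_text_to_text("translate", source="English", target="German", text="That is good."),
     "Das ist gut."),
    (to_text_to_text("sentiment", text="I loved this film."), "positive"),
]
for model_input, target_output in examples:
    print(repr(model_input), "->", repr(target_output))
```

Both the input and the expected output are strings, so translation, summarization, and classification can share the same model, loss, and decoding procedure.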

GPT family

OpenAI, an AI research and deployment company, has a mission “to ensure that artificial general intelligence (AGI) benefits all of humanity.” Of course, it hasn’t achieved AGI yet—and some AI researchers, such as machine learning pioneer Yann LeCun of Meta-FAIR, think that OpenAI’s current approach to AGI is a dead end.

OpenAI is responsible for the GPT family of language models. Here’s a quick look at the entire GPT family and its evolution since 2018. (Note that the entire GPT family is based on Google’s Transformer neural network architecture, which is legitimate because Google open-sourced Transformer.)

GPT (Generative Pretrained Transformer) is a 2018 model from OpenAI that uses about 117 million parameters. GPT is a unidirectional transformer pre-trained on the Toronto Books Corpus, and was trained with a causal language modeling (CLM) objective, meaning that it was trained to predict the next token in a sequence.

GPT-2 is a 2019 direct scale-up of GPT with 1.5 billion parameters, trained on a dataset of 8 million web pages encompassing ~40GB of text data. OpenAI originally restricted access to GPT-2 because it was “too good” and would lead to “fake news.” The company eventually relented, although the potential social problems became even worse with the release of GPT-3.

GPT-3 is a 2020 autoregressive language model with 175 billion parameters, trained on a combination of a filtered version of Common Crawl, WebText2, Books1, Books2, and English Wikipedia. The neural net used in GPT-3 is similar to that of GPT-2, with a couple of additional blocks.

The biggest downside of GPT-3 is that it tends to “hallucinate,” meaning that it makes up facts with no discernable basis. GPT-3.5 and GPT-4 have the same problem, albeit to a lesser extent.

GPT-3.5 is a set of 2022 updates to GPT-3 and Codex. The gpt-3.5-turbo model is optimized for chat but also works well for traditional completion tasks.

GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that OpenAI claims exhibits human-level performance on some professional and academic benchmarks. GPT-4 outperformed GPT-3.5 in various simulated exams, including the Uniform Bar Exam, the LSAT, the GRE, and several AP subject exams.

Note that GPT-3.5 and GPT-4 performance has changed over time. A July 2023 Stanford paper identified several tasks, including prime number identification, where the behavior varied greatly between March 2023 and June 2023.

The latest iterations (likely beyond GPT-4 by 2025) are expected to have even greater capabilities in text generation, reasoning, and multimodal understanding (handling images, audio, etc.). Expect improvements in handling longer contexts and reducing hallucinations.

ChatGPT and BingGPT are chatbots that were originally based on gpt-3.5-turbo and in March 2023 upgraded to use GPT-4. Currently, to access the version of ChatGPT based on GPT-4, you need to subscribe to ChatGPT Plus. The standard ChatGPT, based on GPT-3.5, was trained on data that cut off in September 2021.

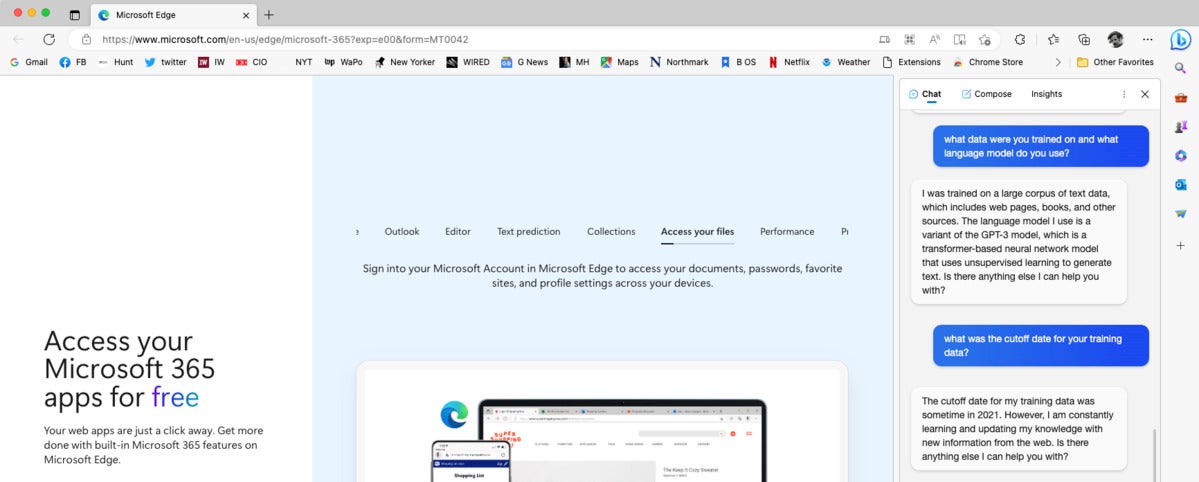

BingGPT, aka “The New Bing,” which you can access in the Microsoft Edge browser, was also trained on data that cut off in 2021. When asked, the bot claims that it is constantly learning and updating its knowledge with new information from the web.

BingGPT explains its language model and training data, as seen in the text window at the right of the screen.

In early March 2023, Professor Pascale Fung of the Centre for Artificial Intelligence Research at the Hong Kong University of Science & Technology gave a talk on ChatGPT evaluation. It’s well worth the hour to watch it.

LaMDA

LaMDA (Language Model for Dialogue Applications), Google’s 2021 “breakthrough” conversation technology, is a Transformer-based language model trained on dialogue and fine-tuned to significantly improve the sensibleness and specificity of its responses. One of LaMDA’s strengths is that it can handle the topic drift that is common in human conversations. While you can’t directly access LaMDA, its impact on the development of conversational AI is undeniable as it pushed the boundaries of what’s possible with language models and paved the way for more sophisticated and human-like AI interactions.

PaLM

PaLM (Pathways Language Model) is a dense decoder-only Transformer model from Google Research with 540 billion parameters, trained with the Pathways system. PaLM was trained using a combination of English and multilingual datasets that include high-quality web documents, books, Wikipedia, conversations, and GitHub code. Google also created a “lossless” vocabulary that preserves all whitespace (especially important for code), splits out-of-vocabulary Unicode characters into bytes, and splits numbers into individual tokens, one for each digit.
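The three properties of that vocabulary can be illustrated with a toy tokenizer. This is not PaLM’s tokenizer. It is a minimal sketch that keeps whitespace runs as tokens, emits one token per digit, and falls back to UTF-8 bytes for non-ASCII characters it does not know. The known-word list and byte-token format are made up for the example.

```python
import re

KNOWN_WORDS = {"total", "def", "x"}  # stand-in for a learned vocabulary


def toy_tokenize(text):
    """Toy tokenizer: keeps whitespace, splits digits, falls back to UTF-8 bytes."""
    tokens = []
    for piece in re.findall(r"\s+|[0-9]|[A-Za-z_]+|.", text):
        if piece.isspace() or piece.isdigit() or piece in KNOWN_WORDS:
            tokens.append(piece)
        elif piece.isascii():
            tokens.extend(piece)  # spell unknown ASCII pieces character by character
        else:
            # Out-of-vocabulary Unicode character: one token per UTF-8 byte.
            tokens.extend(f"<0x{b:02X}>" for b in piece.encode("utf-8"))
    return tokens


print(toy_tokenize("    total = 42"))
print(toy_tokenize("é"))
```

Because the leading indentation survives as a token and every digit is separate, ASCII source code can be reconstructed exactly by joining the tokens back together.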

Google has made PaLM 2 accessible through the PaLM API and MakerSuite. This means developers can now use PaLM 2 to build their own generative AI applications.

PaLM-Coder is a version of PaLM 540B fine-tuned on a Python-only code dataset.

PaLM-E

PaLM-E is a 2023 embodied (for robotics) multimodal language model from Google. The researchers began with PaLM and “embodied” it (the E in PaLM-E), by complementing it with sensor data from the robotic agent. PaLM-E is also a generally-capable vision-and-language model; in addition to PaLM, it incorporates the ViT-22B vision model.

Bard

Google’s Bard chatbot has been updated multiple times since its release. In April 2023 it gained the ability to generate code in 20 programming languages. In July 2023 it gained support for input in 40 human languages, incorporated Google Lens, and added text-to-speech capabilities in over 40 human languages.

LLaMA

LLaMA (Large Language Model Meta AI) is a 65-billion parameter “raw” large language model released by Meta AI (formerly known as Meta-FAIR) in February 2023. According to Meta:

Training smaller foundation models like LLaMA is desirable in the large language model space because it requires far less computing power and resources to test new approaches, validate others’ work, and explore new use cases. Foundation models train on a large set of unlabeled data, which makes them ideal for fine-tuning for a variety of tasks.

LLaMA was released at several sizes, along with a model card that details how it was built. Originally, you had to request the checkpoints and tokenizer, but they are in the wild now: a downloadable torrent was posted on 4chan by someone who properly obtained the models by filing a request, according to Yann LeCun of Meta AI.

Llama

Llama 2 is the next generation of Meta AI’s large language model, trained between January and July 2023 on 40% more data (2 trillion tokens from publicly available sources) than LLaMA 1 and with double the context length (4,096 tokens). Llama 2 comes in a range of parameter sizes (7 billion, 13 billion, and 70 billion) as well as pretrained and fine-tuned variations. Meta AI calls Llama 2 open source, but there are some who disagree, given that it includes restrictions on acceptable use. A commercial license is available in addition to a community license.

Llama 2 is an auto-regressive language model that uses an optimized Transformer architecture. The tuned versions use supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) to align to human preferences for helpfulness and safety. Llama 2 is currently English-only. The model card includes benchmark results and carbon footprint stats. The research paper, Llama 2: Open Foundation and Fine-Tuned Chat Models, offers additional detail.

Claude

Claude 3.5 is the current leading version.

Anthropic’s Claude 2, released in July 2023, accepts up to 100,000 tokens (about 70,000 words) in a single prompt, and can generate stories up to a few thousand tokens. Claude can edit, rewrite, summarize, classify, extract structured data, do Q&A based on the content, and more. It has the most training in English, but also performs well in a range of other common languages, and still has some ability to communicate in less common ones. Claude also has extensive knowledge of programming languages.
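The figures above imply roughly 0.7 words per token, which is enough for a quick estimate of whether a document fits in a single prompt. The sketch below uses that ratio with a plain whitespace word count. Real tokenizers vary by language and content, so treat the result as a rough guide only. The function names are illustrative.

```python
CONTEXT_TOKENS = 100_000  # Claude 2 prompt limit quoted above
WORDS_PER_TOKEN = 0.7     # implied by "100,000 tokens (about 70,000 words)"


def estimated_tokens(text):
    """Rough token estimate from a whitespace word count; real tokenizers differ."""
    return round(len(text.split()) / WORDS_PER_TOKEN)


def fits_in_prompt(text, limit=CONTEXT_TOKENS):
    """Return True if the estimated token count is within the prompt limit."""
    return estimated_tokens(text) <= limit


document = "word " * 35_000
print(estimated_tokens(document), fits_in_prompt(document))
```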

Claude was constitutionally trained to be Helpful, Honest, and Harmless (HHH), and extensively red-teamed to be more harmless and harder to prompt to produce offensive or dangerous output. It doesn’t train on your data or consult the internet for answers, although you can provide Claude with text from the internet and ask it to perform tasks with that content. Claude is available to users in the US and UK as a free beta, and has been adopted by commercial partners such as Jasper (a generative AI platform), Sourcegraph Cody (a code AI platform), and Amazon Bedrock.

Conclusion

As we’ve seen, large language models are under active development at several companies, with new versions shipping more or less monthly from OpenAI, Google AI, Meta AI, and Anthropic. While none of these LLMs achieve true artificial general intelligence (AGI), new models mostly tend to improve over older ones. Still, most LLMs are prone to hallucinations and other ways of going off the rails, and may in some instances produce inaccurate, biased, or other objectionable responses to user prompts. In other words, you should use them only if you can verify that their output is correct.

3 key features of Postman’s AI Agent Builder 17 Feb 2025, 10:00 am

The software landscape is shifting from passive business processes to dynamic, AI-driven workflows. AI agents—systems that interact with APIs, make decisions, and execute complex tasks—are at the forefront of this transformation. While large language models (LLMs) laid the groundwork, agentic AI is redefining how businesses automate operations. However, building these agents is a challenge, requiring developers to integrate multiple tools, testing frameworks, and APIs.

Recognizing this shift, Postman has introduced AI Agent Builder, a suite of tools designed to simplify the creation, testing, and deployment of AI agents. This new offering aims to democratize agent development, allowing teams to focus on designing intelligent workflows rather than wrestling with technical overhead.

This article explores three key AI-driven capabilities within Postman that enhance API development and testing. These features help developers:

- Evaluate and compare LLMs based on performance and cost.

- Orchestrate intelligent automations as agentic workflows.

- Discover and integrate relevant APIs as agent tools, without coding complexity.

We’ll examine each capability in more detail and discuss how they facilitate AI-driven development for teams looking to harness AI for intelligent business processes.

AI protocol: Extending Postman’s API testing to AI models

Postman’s new AI Protocol extends its existing API testing platform to handle AI model interactions. By treating large language models like powerful APIs, development teams can systematically test both system and user prompts, configure model properties for desired creativity or predictability, and benchmark performance based on response time, accuracy, and cost. Collections of prompts act as versioned assets, allowing teams to track prompt changes over time, refine parameters, and maintain consistent test suites as new models or updated versions are released.
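To show the general idea of benchmarking models on accuracy, response time, and cost, here is a minimal, tool-agnostic sketch in Python. It does not use Postman or any real model API: the two “models” are offline stubs, and the per-call costs, test cases, and all names are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Result:
    model: str
    accuracy: float
    avg_seconds: float
    total_cost: float


def benchmark(name: str, model: Callable[[str], str],
              cases: list[tuple[str, str]], cost_per_call: float) -> Result:
    """Run every (prompt, expected) case through one model and aggregate the metrics."""
    correct, elapsed = 0, 0.0
    for prompt, expected in cases:
        start = time.perf_counter()
        answer = model(prompt)
        elapsed += time.perf_counter() - start
        correct += int(expected.lower() in answer.lower())
    return Result(name, correct / len(cases), elapsed / len(cases), cost_per_call * len(cases))


# Stub "models" so the sketch runs offline; real API calls would go here instead.
def model_a(prompt: str) -> str:
    return "Paris" if "France" in prompt else "I don't know"


def model_b(prompt: str) -> str:
    return "Paris" if "France" in prompt else "Berlin"


cases = [("Capital of France?", "Paris"), ("Capital of Germany?", "Berlin")]
results = [benchmark("model-a", model_a, cases, cost_per_call=0.002),
           benchmark("model-b", model_b, cases, cost_per_call=0.001)]
for r in results:
    print(r)
```

Keeping the prompt cases in one shared list is what makes the comparison fair: every model answers exactly the same prompts, so differences in the metrics come from the models rather than from the test set.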

The recent debut of DeepSeek R1 illustrates how quickly organizations scramble to test newly available foundation models for potential performance gains or cost savings. Rather than setting up parallel machine learning pipelines or adopting additional tools, teams can leverage Postman’s existing interface, environment variables, and versioning features to integrate model testing immediately. This approach helps prevent fragmentation across multiple LLM providers by enabling side-by-side comparisons and centralized metrics. Shortly after DeepSeek R1’s release, Postman customers were already evaluating their current prompt collections against both R1 and OpenAI’s o1 model to determine which option delivered the best results for their specific use cases.

Agent Builder: Creating agentic workflows with a visual low-code tool

Postman’s Agent Builder uses the platform’s Flows visual programming interface to create multi-step workflows that integrate both API requests and AI interactions—no extensive coding required. With full integration of the new Postman AI protocol, developers can embed LLMs into their automation sequences to enable dynamic, adaptive, and intelligence-driven processes. For example, AI requests can enrich workflows with real-time data, make context-aware decisions, and discover relevant tools to address business needs. Flows also includes low-code building blocks for conditional logic, scripting capabilities for custom scenarios, and built-in data visualization and reporting, enabling teams to quickly tailor workflows to specific business requirements, reduce development overhead, and deliver actionable insights faster.

This Agent Builder approach supports rapid experimentation, local testing, and debugging, effectively fitting into a developer’s “inner loop.” Collaboration features allow teams to label and section workflows, making it easier to share and explain complex automations with colleagues or stakeholders. For multi-service workflows, developers can confirm each step under realistic conditions using scenarios to ensure consistency and reliability well before final deployment. Scenarios can be versioned and shared, streamlining the process of testing and evaluating agents built with Flows.

API Discovery and Tool Generation: Easy access to verified APIs

Postman’s API Discovery and Tool Generation capabilities add the ability to find and integrate the right APIs to use with AI agents. By leveraging Postman’s network of more than 100,000 public APIs, developers can automatically generate “agent tools,” removing the need to manually write wrappers or boilerplate code for those APIs. This scaffolding step includes specifying which agent framework (e.g., Node.js, Python, Java) and which target LLM service or library the agent will use, even if official SDKs don’t exist yet. As a result, teams can focus on core workflow logic rather than wrestling with setup details.
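For readers unfamiliar with the term, an “agent tool” is typically a thin wrapper that tells an LLM-driven agent what a function does, which parameters it takes, and how to call it. The sketch below shows that shape in plain Python. It is not the code Postman generates, and the class, the weather function, and its response are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class AgentTool:
    name: str
    description: str
    parameters: dict[str, str]  # parameter name -> human-readable type
    run: Callable[..., Any]

    def call(self, **kwargs: Any) -> Any:
        """Validate that all declared parameters are present, then invoke the wrapped function."""
        missing = [p for p in self.parameters if p not in kwargs]
        if missing:
            raise ValueError(f"missing parameters: {missing}")
        return self.run(**kwargs)


def get_weather(city: str) -> dict:
    # Stand-in for an HTTP request to a real API endpoint.
    return {"city": city, "forecast": "sunny"}


weather_tool = AgentTool(
    name="get_weather",
    description="Look up the forecast for a city.",
    parameters={"city": "string"},
    run=get_weather,
)
print(weather_tool.call(city="Oslo"))
```

Writing this kind of wrapper by hand for every API is the boilerplate that tool generation is meant to remove.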

Moreover, verified partner APIs in the catalog help ensure agents are configured accurately for critical business tasks. Instead of researching and integrating each API from scratch, developers can rely on the Postman network to surface endpoints, request payloads, and authentication specifications suited to specific AI-driven use cases. By consolidating discovery, documentation, and testing in one place, teams can filter through a vast API collection, preview endpoints, run sample requests directly in their browser or the Postman client, and then generate ready-to-run code. This results in faster onboarding, more reliable integrations, and a broader range of capabilities for AI-powered applications. Without these built-in safeguards and automation, developers would need to manually verify each API’s reliability, usage patterns, and code compatibility—an error-prone and time-consuming process.

A unified approach to AI-driven automation

By combining AI model testing, low-code agent building, and tool discovery in one platform, Postman helps developers standardize how AI workflows and traditional APIs intersect. Teams can build on familiar API practices—such as versioning, environment variables, and collaboration—while extending them to AI-powered services. This unified approach fosters consistent testing, quality standards, and data management across both conventional APIs and AI-driven workflows.

For organizations looking to operationalize AI, these capabilities provide a smooth pathway from prompt engineering and multi-LLM evaluation to production-grade intelligent automation, without juggling multiple platforms, integrations, or tools.

For deeper technical details and documentation, visit the official Postman AI Agent Builder documentation. Whether you’re a newcomer experimenting with LLMs or a seasoned pro looking for enterprise-grade testing and integration, Postman’s latest features aim to simplify and unify your AI development workflow.

Rodric Rabbah is the head of product for Flows at Postman. An accomplished entrepreneur and technologist, Rabbah founded Nimbella, a serverless cloud company that was successfully acquired by DigitalOcean, where he led the launch of DigitalOcean Functions. He is the main creator and developer behind Apache OpenWhisk, the open-source platform for serverless computing. He created OpenWhisk while at IBM Research, where he also led the development and operations of IBM Cloud Functions.

—

New Tech Forum provides a venue for technology leaders—including vendors and other outside contributors—to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send inquiries to doug_dineley@foundryco.com.

How to keep AI hallucinations out of your code 17 Feb 2025, 10:00 am

It turns out androids do dream, and their dreams are often strange. In the early days of generative AI, we got human hands with eight fingers and recipes for making pizza sauce from glue. Now, developers working with AI-assisted coding tools are also finding AI hallucinations in their code.

“AI hallucinations in coding tools occur due to the probabilistic nature of AI models, which generate outputs based on statistical likelihoods rather than deterministic logic,” explains Mithilesh Ramaswamy, a senior engineer at Microsoft. And just like that glue pizza recipe, sometimes these hallucinations escape containment.

AI coding assistants are increasingly omnipresent, and usage is growing, with 62% of respondents saying they were using AI coding tools in the May 2024 Stack Overflow developer survey. So how can you prevent AI hallucinations from ruining your code? We asked developers and tech leaders experienced with using AI coding assistants for their tips.

How AI hallucinations infect code

Microsoft’s Ramaswamy, who works every day with AI tools, keeps a list of the sorts of AI hallucinations he encounters: “Generated code that doesn’t compile; code that is overly convoluted or inefficient; and functions or algorithms that contradict themselves or produce ambiguous behavior.” Additionally, he says, “AI hallucinations sometimes just make up nonexistent functions” and “generated code may reference documentation, but the described behavior doesn’t match what the code does.”

Komninos Chatzipapas, founder of HeraHaven.ai, gives an example of a specific problem of this type. “On our JavaScript back-end, we had a function to deduct credit from a user based on their ID,” he says. “The function expected an object containing an ID value as its parameter, but the coding assistant just put the ID as the parameter.” He notes that in loosely typed languages like JavaScript, problems like these are more likely to slip past language parsers. The error Chatzipapas encountered “crashed our staging environment, but was fortunately caught before pushed to production.”
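The same class of mistake is easy to reproduce in other dynamically typed languages. Below is a Python analogue of the bug, not the HeraHaven code: the function expects an object with an `id` field, and a defensive check makes the wrong call shape fail immediately with a clear message. The function name, the in-memory balances, and the error text are invented for illustration.

```python
balances = {"user-1": 10}


def deduct_credit(request: dict, amount: int = 1) -> int:
    """Deduct credit from the user named in request["id"]; return the new balance."""
    if not isinstance(request, dict) or "id" not in request:
        # Fail fast with a clear message instead of crashing somewhere deeper.
        raise TypeError('expected an object like {"id": ...}, got ' + repr(request))
    balances[request["id"]] -= amount
    return balances[request["id"]]


new_balance = deduct_credit({"id": "user-1"})  # the intended call shape
print(new_balance)

try:
    deduct_credit("user-1")  # the call shape the assistant generated
except TypeError as error:
    print("rejected:", error)
```

Static type checking or a unit test that exercises the call would catch the mismatch even earlier, before the code reaches a staging environment.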

How does code like this slip into production? Monojit Banerjee, a lead in the AI platform organization at Salesforce, describes the code output by many AI assistants as “plausible but incorrect or non-functional.” Brett Smith, distinguished software developer at SAS, notes that less experienced developers are especially likely to be misled by the AI tool’s confidence, “leading to flawed code.”

The consequences of flawed AI code can be significant. Security holes and compliance issues are top of mind for many software companies, but some issues are less immediately obvious. Faulty AI-generated code adds to overall technical debt, and it can detract from the efficiency code assistants are intended to boost. “Hallucinated code often leads to inefficient designs or hacks that require rework, increasing long-term maintenance costs,” says Microsoft’s Ramaswamy.

Fortunately, the developers we spoke with had plenty of advice about how to ensure AI-generated code is correct and secure. There were two categories of tips: how to minimize the chance of code hallucinations, and how to catch hallucinations after the fact.

Reducing AI hallucinations in your code

The ideal would of course be to never encounter AI hallucinations at all. While that’s unlikely given the current state of the art, the following precautions can help reduce issues in AI-generated code.

Write clear and detailed prompts

The adage “garbage in, garbage out” is as old as computer science, and it applies to LLMs as well, especially when you’re generating code by prompting rather than using an autocomplete assistant. Many of the experts we spoke to urged developers to get their prompt engineering game on point. “It’s best to ask bounded questions and critically examine the results,” says Andrew Sellers, head of technology strategy at Confluent. “Usage data from these tools suggest that outputs tend to be more accurate for questions with a smaller scope, and most developers will be better at catching errors by frequently examining small blocks of code.”

Ask for references

LLMs like ChatGPT are notorious for making up citations in school papers and legal briefs. But code-specific tools have made great strides in that area. “Many models are supporting citation features,” says Salesforce’s Banerjee. “A developer should ask for citations or API reference wherever possible to minimize hallucinations.”

Make sure your AI tool has trained on the latest software

Most genAI chatbots can’t tell you who won your home team’s baseball game last night, and they have limitations keeping up with software tools and updates as well. “One of the ways you can predict whether a tool will hallucinate or provide biased outputs is by checking its knowledge cut-offs,” says Stoyan Mitov, CEO of Dreamix and co-founder of the Citizens app. “If you plan on using the latest libraries or frameworks that the tool doesn’t know about, the chances that the output will be flawed are high.”

Train your model to do things your way

Travis Rehl, CTO at Innovative Solutions, says what generative AI tools need to work well is “context, context, context.” You need to provide good examples of what you want and how you want it done, he says. “You should tell the LLM to maintain a certain pattern, or remind it to use a consistent method so it doesn’t create something new or different.” If you fail to do so, you can run into a subtle type of hallucination that injects anti-patterns into your code. “Maybe you always make an API call a particular way, but the LLM chooses a different method,” he says. “While technically correct, it did not follow your pattern and thus deviated from what the norm needs to be.”

A concept that takes this idea to its logical conclusion is retrieval augmented generation, or RAG, in which the model uses one or more designated “sources of truth” that contain code either specific to the user or at least vetted by them. “Grounding compares the AI’s output to reliable data sources, reducing the likelihood of generating false information,” says Mitov. RAG is “one of the most effective grounding methods,” he says. “It improves LLM outputs by utilizing data from external sources, internal codebases, or API references in real time.”

Many available coding assistants already integrate RAG features—the one in Cursor is called @codebase, for instance. If you want to create your own internal codebase for an LLM to draw from, you would need to store it in a vector database; Banerjee points to Chroma as one of the most popular options.
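To make the retrieval step concrete, here is a toy sketch of the idea, under loud assumptions: it uses word counts and cosine similarity from the Python standard library as a stand-in for a real embedding model and vector database, and the snippet contents, the `retrieve` and `build_prompt` helpers, and the prompt wording are all hypothetical. It is not how Cursor or Chroma work internally; it only illustrates "find the most relevant vetted code and put it in front of the model."

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag of lowercase identifier-like tokens."""
    return Counter(re.findall(r"[a-z_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, snippets: list[str], k: int = 1) -> list[str]:
    """Return the k vetted snippets most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(
        snippets, key=lambda s: cosine(query_vector, embed(s)), reverse=True
    )
    return ranked[:k]


def build_prompt(task: str, snippets: list[str], k: int = 1) -> str:
    """Prepend the retrieved snippets to the task as grounding context."""
    context = "\n\n".join(retrieve(task, snippets, k))
    return (
        "Follow the patterns in these vetted examples:\n"
        f"{context}\n\nTask: {task}"
    )


# Hypothetical vetted snippets standing in for an internal codebase.
vetted_snippets = [
    "def fetch_user(user_id): return api_client.get('/users/' + user_id)",
    "def render_invoice(invoice): return template.render(invoice=invoice)",
]

print(build_prompt("write a function to fetch a user by user_id", vetted_snippets))
```

A production setup would swap the word-count vectors for learned embeddings and the list for a vector database, but the shape of the flow (embed, rank, prepend) stays the same.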

Catching AI hallucinations in your code

Even with all of these protective measures, AI coding assistants will sometimes make mistakes. The good news is that hallucinations are often easier to catch in code than in applications where the LLM is writing plain text. The difference is that code is executable and can be tested. “Coding is not subjective,” as Innovative Solutions’ Rehl points out. “Code simply won’t work when it’s wrong.” Experts offered a few ways to spot mistakes in generated code.

Use AI to evaluate AI-generated code

Believe it or not, AI assistants can evaluate AI-generated code for hallucinations—often to good effect. For instance, Daniel Lynch, CEO of Empathy First Media, suggests “writing supporting documentation on the code so that you can have the AI evaluate the provided code in a new instance and determine if it satisfies the requirements of the intended use case.”

HeraHaven’s Chatzipapas suggests that AI tools can do far more in judging output from other tools. “Scaling test-time compute deals with the issue where, for the same input, an LLM can generate a variety of responses, all with different levels of quality,” he explains. “There are many ways to make it work but the simplest one is to query the LLM multiple times and then use a smaller ‘verifier’ AI model to pick which answer is better to present to the end user. There are also more sophisticated ways where you can cluster the different answers you get and pick one from the largest cluster (since that one has received more implied ‘votes’).”
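The clustering variant Chatzipapas mentions can be sketched as a simple majority vote. The example below is a minimal illustration, assuming that answers count as "the same" when they match after whitespace is collapsed; that equality rule and the hard-coded candidate strings (standing in for repeated LLM responses) are assumptions, and a real system might instead group answers by test results or semantic similarity.

```python
from collections import Counter


def normalize(answer: str) -> str:
    """Collapse runs of whitespace so trivially different answers match."""
    return " ".join(answer.split())


def pick_by_majority(candidates: list[str]) -> str:
    """Return the first candidate belonging to the largest cluster.

    Each cluster is the set of candidates that are identical after
    normalization; the cluster size acts as the implied vote count.
    """
    if not candidates:
        raise ValueError("need at least one candidate answer")
    votes = Counter(normalize(candidate) for candidate in candidates)
    winning_key, _ = votes.most_common(1)[0]
    for candidate in candidates:
        if normalize(candidate) == winning_key:
            return candidate
    raise AssertionError("unreachable: the winning key came from the candidates")


# Hard-coded stand-ins for several responses to the same prompt.
responses = [
    "return a + b",
    "return a+b",
    "return  a + b",
    "return a - b",
]
print(pick_by_majority(responses))
```

Here two of the four responses fall into the same cluster after normalization, so that cluster wins the vote.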

Maintain human involvement and expertise

Even with machine assistance, most people we spoke to saw human beings as the last line of defense against AI hallucination, and expected human involvement to remain crucial to the coding process for the foreseeable future. “Always use AI as a guide, not a source of truth,” says Microsoft’s Ramaswamy. “Treat AI-generated code as a suggestion, not a replacement for human expertise.”

That expertise shouldn’t just be around programming generally; you should stay intimately acquainted with the code that powers your applications. “It can sometimes be hard to spot a hallucination if you’re unfamiliar with a codebase,” says Rehl. Having hands-on experience in the codebase is critical to spotting deviations in specific methods or the overall code pattern, for example.

Test and review your code

Fortunately, the tools and techniques most well-run shops use to catch human errors, from IDE tools to unit tests, can also catch AI hallucinations. “Teams should continue doing pull requests and code reviews just as if the code were written by humans,” says Confluent’s Sellers. “It’s tempting for developers to use these tools to automate more in achieving continuous delivery. While laudable, it’s incredibly important for developers to prioritize QA controls when increasing automation.”

“I cannot stress enough the need to use good linting tools and SAST scanners throughout the development cycle,” says SAS’s Smith. “IDE plugins, integration into the CI, and pull requests are the bare minimum to ensure hallucinations do not make it to production.”

“A mature devops pipeline is essential, where each line of code will be unit tested during the development lifecycle,” adds Salesforce’s Banerjee. “The pipeline will only promote the code to staging and production after tests and builds are passed. Moreover, continuous deployment is essential to roll back code as soon as possible to avoid a long tail of any outage.”
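As one illustration of the kind of automated check that could sit alongside linters and unit tests, the sketch below looks for calls to functions that a module does not actually provide, one of the hallucination types mentioned earlier. It is a deliberately narrow example built on assumptions: the helper name `find_missing_attributes` is hypothetical, it only handles plain `import module` statements, and it imports the named modules to inspect them, so it should only be run where importing those modules is safe.

```python
import ast
import importlib


def find_missing_attributes(source: str) -> list[str]:
    """Return 'module.attr' names that the source uses but the module lacks."""
    tree = ast.parse(source)

    # Map each local alias to its module for "import x" / "import x as y".
    aliases: dict[str, str] = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if "." not in alias.name:  # keep the sketch to top-level modules
                    aliases[alias.asname or alias.name] = alias.name

    missing: list[str] = []
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Attribute)
            and isinstance(node.value, ast.Name)
            and node.value.id in aliases
        ):
            module_name = aliases[node.value.id]
            try:
                module = importlib.import_module(module_name)
            except ImportError:
                # The module itself cannot be imported in this environment.
                missing.append(module_name)
                continue
            if not hasattr(module, node.attr):
                missing.append(f"{module_name}.{node.attr}")
    return missing


# Hypothetical generated code that calls a function the math module lacks.
generated = "import math\nx = math.sqrt(16)\ny = math.cube_root(27)\n"
print(find_missing_attributes(generated))
```

A check like this is no substitute for running the tests, but it fails fast on one specific mistake before the code is ever executed.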

Highlight AI-generated code

Devansh Agarwal, a machine learning engineer at Amazon Web Services, recommends a technique that he calls “a little experiment of mine”: Use the code review UI to call out parts of the codebase that are AI-generated. “I often see hundreds of lines of unit test code being approved without any comments from the reviewer,” he says, “and these unit tests are one of the use cases where I and others often use AI. Once you mark that these are AI-generated, then people take more time in reviewing them.”

This doesn’t just help catch hallucinations, he says. “It’s a great learning opportunity for everyone in the team. Sometimes it does an amazing job and we as humans want to replicate it!”

Keep the developer in the driver’s seat

Generative AI is ultimately a tool, nothing more and nothing less. Like all other tools, it has quirks. While using AI changes some aspects of programming and makes individual programmers more productive, its tendency to hallucinate means that human developers must remain in the driver’s seat for the foreseeable future. “I’m finding that coding will slowly become a QA- and product definition-heavy job,” says Rehl. As a developer, “your goal will be to understand patterns, understand testing methods, and be able to articulate the business goal you want the code to achieve.”

What if generative AI can’t get it right? 17 Feb 2025, 10:00 am

Large language models (LLMs) keep getting faster and more capable. That doesn’t mean they’re correct. This is arguably the biggest shortcoming of generative AI: It can be incredibly fast while simultaneously being incredibly wrong. This may not be an issue in areas like marketing or software development, where tests and reviews can find and fix errors. However, as analyst Benedict Evans points out, “There is also a broad class of task that we would like to be able to automate, that’s boring and time-consuming and can’t be done by traditional software, where the quality of the result is not a percentage, but a binary.” In other words, he says, “For some tasks, the answer is not better or worse: It’s right or not right.”

Until generative AI can give us facts and not probabilities, it’s simply not going to be good enough for a wide swath of use cases, no matter how much the next DeepSeek speeds up its calculations.

Fact-checking AI

In January DeepSeek seemingly changed everything in AI. Mind-blowing speed at dramatically lower costs. As Lucas Mearian writes, DeepSeek sent “shock waves” through the AI community, but its impact likely won’t last. Soon there will be something faster and cheaper. But will there be something that provides what we most need? That is, more accuracy and truth? We can’t solve that problem by making AI more open. It’s deeper than that.

“Every week there’s a better AI model that gives better answers,” Evans notes. “But a lot of questions don’t have better answers, only right answers, and these models can’t do that.” This isn’t to say performance and cost improvements aren’t needed. DeepSeek, for example, makes genAI models more affordable for enterprises that want to build them into applications. And, as investor Martin Casado and former Microsoft executive Steven Sinofsky suggest, the application layer, not infrastructure, is the most interesting and important area for genAI development.

The problem, however, is that many applications depend on right-or-wrong answers, not “probabilistic … outputs based on patterns they have observed in the training data,” as I’ve covered before. As Evans expresses it, “There are some tasks where a better model produces better, more accurate results, but other tasks where there’s no such thing as a better result and no such thing as more accurate, only right or wrong.”

In the absence of the ability to speak truth rather than probabilities, the models may be worse than useless for many tasks. The problem is that these models can be exceptionally confident and wrong at the same time. It’s worth quoting an Evans example at length. In trying to find the number of elevator operators in the United States in 1980 (a number clearly identified in a U.S. Census report), he gets a range of answers:

First, I try [the question] cold, and I get an answer that’s specific, unsourced, and wrong. Then I try helping it with the primary source, and I get a different wrong answer with a list of sources, that are indeed the U.S. Census, and the first link goes to the correct PDF… but the number is still wrong. Hmm. Let’s try giving it the actual PDF? Nope. Explaining exactly where in the PDF to look? Nope. Asking it to browse the web? Nope, nope, nope…. I don’t need an answer that’s perhaps more likely to be right, especially if I can’t tell. I need an answer that is right.

Just wrong enough

But what about questions that don’t require a single right answer? For the particular purpose for which Evans was trying to use genAI, the system will always be just wrong enough to never give the right answer. Maybe, just maybe, better models will fix this over time and become consistently correct in their output. Maybe.

The more interesting question Evans poses is whether there are “places where [generative AI’s] error rate is a feature, not a bug.” It’s hard to think of how being wrong could be an asset, but as an industry (and as humans) we tend to be really bad at predicting the future. Today we’re trying to retrofit genAI’s non-deterministic approach to deterministic systems, and we’re getting hallucinating machines in response.

This doesn’t seem to be yet another case of Silicon Valley’s overindulgence in wishful thinking about technology (blockchain, for example). There’s something real in generative AI. But to get there, we may need to figure out new ways to program, accepting probability rather than certainty as a desirable outcome.

AI coding assistants limited but helpful, developers say 15 Feb 2025, 2:27 am

AI coding assistants still need to mature, but they are already helpful, according to several attendees of a recent Silicon Valley event for software developers.